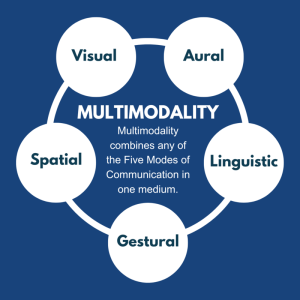

Multimodality in language learning holds that effective communication and learning draw on multiple channels, or modes, such as visual, auditory, textual, and kinesthetic. The approach emphasizes that these modes can complement and reinforce one another, producing a richer and more effective learning experience.

Core Components of Multimodality

- Various Modes of Representation: Language learning draws on several modes:

  - Visual: Includes images, videos, diagrams, and written text.

  - Auditory: Encompasses sounds, music, and spoken language.

  - Gestural/Kinesthetic: Involves body language, gesture, and physical interaction.

  - Spatial: Concerns how elements are arranged in space, such as page or screen layout.

  - Linguistic: Covers grammar, vocabulary, and syntax.

- Synergy of Modes: These modes work together to enhance each other’s effects. For example, watching a video (visual) with subtitles (textual) while listening to the dialogue (auditory) can improve comprehension and memory.

- Contextual Learning: Language is learned within real-world or simulated contexts, where several modes together supply the cues learners need to infer meaning.

Role of AI in Multimodal Language Learning

AI technologies enhance multimodal approaches by leveraging the strengths of each mode and providing a cohesive, integrated learning experience:

- Adaptive Learning Systems: AI customizes learning paths based on individual needs by analyzing learner data, adapting to strengths and weaknesses across different modes.

- Natural Language Processing (NLP): AI-driven NLP tools offer real-time feedback on language use, assisting with grammar, vocabulary, and writing through interactive exercises.

- Speech Recognition and Synthesis: AI systems can recognize and generate speech, enabling pronunciation feedback and spoken practice with virtual assistants or chatbots.

- Visual and Textual Integration: AI can generate or suggest visual aids, translations, and contextual information to support textual content, enhancing understanding.

- Interactive and Immersive Experiences: AI-powered virtual and augmented reality (VR/AR) environments provide immersive learning experiences where learners can practice language skills in realistic settings.
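The adaptive-learning idea described above can be illustrated with a deliberately simple sketch. It assumes a hypothetical learner model that counts correct answers per mode and schedules the next exercise in the mode where the learner's estimated accuracy is lowest. The class name, method names, and the selection rule are illustrative assumptions, not how any particular product works.

```python
from dataclasses import dataclass, field

# The modes listed under "Various Modes of Representation" (names are illustrative)
MODES = ("visual", "auditory", "gestural", "spatial", "linguistic")


@dataclass
class LearnerModel:
    """Hypothetical learner model: tracks correct and attempted exercises per mode."""

    correct: dict = field(default_factory=lambda: {m: 0 for m in MODES})
    attempts: dict = field(default_factory=lambda: {m: 0 for m in MODES})

    def record(self, mode: str, was_correct: bool) -> None:
        # Update the counts after the learner finishes an exercise in `mode`
        self.attempts[mode] += 1
        if was_correct:
            self.correct[mode] += 1

    def accuracy(self, mode: str) -> float:
        # Add-one smoothing: an untried mode starts at 0.5 instead of being undefined
        return (self.correct[mode] + 1) / (self.attempts[mode] + 2)

    def next_mode(self) -> str:
        # Target the weakest mode; ties go to the earliest mode in MODES
        return min(MODES, key=self.accuracy)


learner = LearnerModel()
for _ in range(3):
    learner.record("visual", True)
for _ in range(2):
    learner.record("auditory", False)
print(learner.next_mode())
```

Always targeting the weakest mode is the simplest possible policy; it can starve the other modes of practice, so a real adaptive system would likely blend in variety and use a richer model of learner knowledge.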

Examples of AI-Enhanced Multimodal Language Learning Tools

- Duolingo: Uses AI to blend visual, auditory, and textual elements in lessons, providing instant feedback and adapting to the learner’s progress.

- Rosetta Stone: Uses speech recognition for pronunciation practice and pairs images, audio, and text in interactive lessons.

- Google Translate: Provides real-time translation of typed text, speech, and images captured with the camera, integrating textual, auditory, and visual modes.

- HelloTalk: Links learners with native speakers for language exchange, using AI to facilitate conversations and provide multimodal resources.

Benefits of AI-Enhanced Multimodal Language Learning

- Increased Engagement: Multimodal content maintains learner interest and motivation by offering varied learning experiences.

- Improved Comprehension and Retention: Multiple modes reinforce learning, making it easier to understand and remember new language concepts.

- Personalized Learning: AI tailors the learning experience to individual needs, aiming for the combination of modes that works best for each learner.

- Practical Application: Immersive and interactive AI tools allow learners to practice language in realistic scenarios, bridging the gap between theoretical knowledge and practical use.

Multimodality in language learning, when enhanced by AI technology, provides a holistic approach that leverages multiple modes of communication to create a rich, engaging, and effective learning experience. By integrating visual, auditory, textual, and interactive elements, and personalizing the learning journey with AI, this approach caters to the diverse needs of learners, promoting deeper understanding and more meaningful language acquisition.

@mhsantosa (2024)